Four quick proteomics analyses using PostgreSQL and R

I have proteomics data quantifying the levels of 2801 proteins in 24 biological samples. In addition I have metadata on the 24 samples and one or more variables of interest. A variable of interest could be anything – say, dosage of a drug that the cells were treated with, quantity of a particular protein of interest, quantitative phenotype of the patient who donated the cell line.

This post will present code for four quick analyses of these data using PostgreSQL and R as tools:

- Generate some basic descriptive statistics to understand the data.

- Is any protein’s expression level correlated with the variable of interest?

- Are any principal components correlated with the variable of interest?

- Is any protein’s expression level correlated with the variable of interest after controlling for covariates?

The motivation for these questions is as follows. Suppose we’re trying to figure out what protein drives a phenotype, or what protein(s) affected by a drug – #1 tries to answer that. But a phenotype (or a drug’s effect) may be bigger than just one or a few proteins and may have an impact on the global protein expression profile across cells – #2 tries to determine whether that is the case. Finally, if the cell lines have very different protein expression profiles for whatever reason, that might muddy the answer to #1, so #3 re-asks the question from #1 with a control for a few principal components.

0. Generate some basic descriptive statistics to understand the data.

I first introduced my proteomics dataset in this post. When I got it, it was already finished data – the protein levels had already been ‘called’ and I never got to see the raw mass spec data. After cleaning up the data with a few unix command line tools, I have a 2801×53 table. Rows: 2801 proteins. Columns: uniprot protein id, protein description, iTRAQ protein fragments, and then 50 columns of sample protein quantification. Those 50 columns represent 50 ‘runs’ which are 24 biological samples with 2 technical replicates each, except lucky sample 1 which got 4 technical replicates.

Our proteomics company, Proteome Sciences, did not provide any information on their data normalization procedure nor on the meaning of missing values in their data. I wrote to them through their contact us page asking these 2 questions, and they never wrote back. Absent any guidance from the people who created the data, my first question was to see if I could get some idea of how the data had been normalized. Here’s what I found. I read the table into R and by slicing the data in columns versus in rows, I could see they had normalized within each run, not within each protein.

> prot = read.table('run-protein-levels.txt',sep='\t',header=TRUE)

> dim(prot)

[1] 2801 53

# different columns (runs) all have pretty much the same mean:

> mean(prot[,8],na.rm=TRUE)

[1] -0.08057101

> mean(prot[,9],na.rm=TRUE)

[1] -0.08055821

> mean(prot[,10],na.rm=TRUE)

[1] -0.08056104

# different rows have different means:

> x = prot[12,4:53]

> sum(x,na.rm=TRUE)/sum(!is.na(x))

[1] -0.09357906

> x = prot[13,4:53]

> sum(x,na.rm=TRUE)/sum(!is.na(x))

[1] -0.04419777

> x = prot[14,4:53]

> sum(x,na.rm=TRUE)/sum(!is.na(x))

[1] -0.07317855

> x = prot[15,4:53]

> sum(x,na.rm=TRUE)/sum(!is.na(x))

[1] -0.2118259

I can’t figure out what ‘normalization’ procedure they followed. The data are far from normal (these figures are almost exactly the same for every run). (Skewness and kurtosis are from the fBasics package for R):

> min(prot[,8],na.rm=TRUE) [1] -3.346263 > max(prot[,8],na.rm=TRUE) [1] 1.537259 > mean(prot[,8],na.rm=TRUE) [1] -0.08057101 > sd(prot[,8],na.rm=TRUE) [1] 0.2176508 > library(fBasics) # for skewness & kurtosis > skewness(prot[,8],na.rm=TRUE) [1] -2.534116 attr(,"method") [1] "moment" > kurtosis(prot[,8],na.rm=TRUE) [1] 34.84668 attr(,"method") [1] "excess"

A min of -3.35, max 1.54, considerable skewness and loads of positive excess kurtosis. For every run. Bizarre indeed. Perhaps there is some logic to this, but without knowing what that logic might be, I may just end up z-scoring everything later.

There are 3998 missing values, about 2.8% of the whole dataset:

> sum(is.na(prot)) [1] 3998 > sum(is.na(prot))/(50*2801) [1] 0.02854695

And no indication of why NA values arise: does it mean “no protein detected” or “an error occurred and no measurement was obtained” or something else? This interpretation is important for some downstream analyses; luckily as we’ll see the number of cases where both technical replicates are NA is fewer, few enough that it probably doesn’t matter too much how I handle them.

A final important QC measure: technical replicates of the same sample should be quite similar to each other – more similar than, say, two different samples are to each other. If technical replicates were no more similar than two samples are, then I’d say noise overwhelms signal in the dataset.

My column names reflect the sample id and then the technical replicate number, but aren’t in order, so first I’ll sort the columns by name and then z-score them all:

protz = prot[,order(names(prot))] # create a new dataframe with columns sorted by name

# loop through and z-score every column

for (i in 4:53) {

protz[,i] = (prot[,order(names(prot))][,i]-mean(prot[,order(names(prot))][,i],na.rm=TRUE))/sd(prot[,order(names(prot))][,i],na.rm=TRUE)

}

And then I’ll loop through comparing each pair of technical replicates and storing the Pearson correlation values in a vector:

technical.cors = vector(mode="numeric",length=25) # to store results

indices = seq(4,53,2) # list of every other index, i.e. first of each pair of technical reps

for (i in indices) {

technical.cors[which(indices==i)] = cor.test(protz[,i],protz[,i+1])$estimate

}

Result: the correlation between pairs of technical replicates ranges from .56 to .79, average .66. Now as for correlation between different samples:

npairs = choose(50,2) - choose(4,2) - 23 # number of pairs of replicates from different biological samples

sample.cors = vector(mode="numeric",length=npairs) # to hold results

counter = 1

for (i in 4:53) {

for (j in 4:53) {

if (i < j && substring(names(protz)[i],1,3)!=substring(names(protz)[j],1,3)) { # if sample names are different

sample.cors[counter] = cor.test(protz[,i],protz[,j])$estimate # store a correlation result

counter = counter + 1

}

}

}

mean(sample.cors)

min(sample.cors)

max(sample.cors)

sum(sample.pvals < .05)/npairs

Result here: average Pearson’s correlation between two replicates from different biological samples is .06, minimum -.24, max .51. Only 75% of the correlations are even nominally significant at p < .05.

This is most curious. Recently I asked a similar question of some microarray data and found that gene expression profiles had a correlation of ~.86, pretty consistently, between different biological samples. That makes sense to me: provided your samples are all from the same tissue or cell type, some genes will be highly expressed and others not very highly expressed, in every sample. To have only .66 correlation even between different technical replicates of the same sample and then to have almost no correlation at all between different samples seems odd to me. Does this mean the data are just very noisy? Or might it be a result of the normalization procedure? I welcome any suggestions.

I’d also be curious for thoughts on the best way to handle the technical replicates, given that they’re not always so concordant. For instance I had thought of throwing out data points (i.e. sample-protein combinations: cells in the matrix) where technical replicates disagree by more than some threshold amount. But for now I am just going to do the simplest possible thing, and average them. Where one is missing I’ll just keep the other, thus cutting down on my number of NA values.

To combine columns like this in R, which and rowMeans are helpful:

# create a new data frame based on the old one

protz_sampleagg = protz[,1:27] # 3 descriptor cols + 24 samples

# name the columns in the new array according to unique sample ids

names(protz_sampleagg) = c(names(protz)[1:3], sort(unique(substring(names(protz)[4:53],1,3))) )

for (i in names(protz_sampleagg)[4:27]) { # loop through sample ids

# get indices of columns corresponding technical repolicates for this sample

whichcols = which( substring(names(protz),1,3) == i )

# take average, removing missing vals

protz_sampleagg[i] = rowMeans(protz[,whichcols],na.rm=TRUE)

}

Or a different way of achieving the same thing: since I already have this matrix of protein levels relationalized into a PostgreSQL database (per these instructions), I can group by sample id and uniprot id, take the average, and then use my automatic pivot table function to cast it back into a matrix. After creating the pivot table function, I just do this:

drop table if exists avglevels;

create table avglevels as

select ps_sampleid, uniprotkb_ac_full_old, avg(level) avlevel from proteomics_levels pl, proteomics_runs pr where pl.runid = pr.runid group by 1,2 order by 1,2;

select pivotcode('avglevels','uniprotkb_ac_full_old','lpad(ps_sampleid::varchar,2,''0'')','max(avlevel)','numeric');

(BTW, lpad is to turn sample ids such as ’1′ into ’01′). I then slightly modify the output, changing the lpad statement back to just ps_sampleid, to get this query:

-- slightly modified the output before running: had to change 'lpad(ps_sampleid::varchar,2,''0'')' back to just ps_sampleid in the two quoted subqueries:

select * from crosstab (

'select uniprotkb_ac_full_old,ps_sampleid,max(avlevel) from avglevels group by 1,2 order by 1,2',

'select distinct ps_sampleid from avglevels order by 1'

)

as newtable (

uniprotkb_ac_full_old varchar,_01 numeric,_02 numeric,_03 numeric,_04 numeric,_05 numeric,_06 numeric,_07 numeric,_08 numeric,_09 numeric,_10 numeric,_11 numeric,_12 numeric,_13 numeric,_14 numeric,_15 numeric,_16 numeric,_17 numeric,_18 numeric,_19 numeric,_20 numeric,_21 numeric,_22 numeric,_23 numeric,_24 numeric

);

And that query will return a matrix which you could write out to disk and read back into R, or query directly from R via RPostgreSQL. The PostgreSQL and R results should be identical, with two caveats: if you z-scored, you need to have done it in the same order both times (always before or always after combining technical replicates), and depending on your number formats R may have given you a lexical sort instead of a numeric sort on the column names.

Either way, at this point we have a table with rows as proteins and columns as samples. My goal in the next analysis will be to ask whether any protein’s level correlates with a variable of interest. That’s easier to do if the proteins are columns, so I may want to transpose my matrix:

tprot = data.frame(t(protz_sampleagg[,4:27])) # transpose the actual protein levels portion of the matrix

colnames(tprot) = as.character(protz_sampleagg[,1]) # use the uniprot symbols from the first column as the new col names

Also useful to note: the process of aggregating by sampleid has greatly reduced the number of missing values in the dataset – above it was ~2.8% of the matrix, now it’s just 0.25%:

> sum(is.na(tprot)) [1] 169 > sum(is.na(tprot))/(24*2801) [1] 0.002513983

And with that I’m ready for Question 1.

1. Is any protein’s expression level correlated with the variable of interest?

To answer this question I will simply loop through the proteins and correlate each one with my variable of interest. Before you do this: check and then double check that the samples are sorted in the same order in the variable of interest vector as they are in the protein level matrix. If they’re different (for instance, one is sorted numerically and the other lexically) it is incredibly easy to get a false negative.

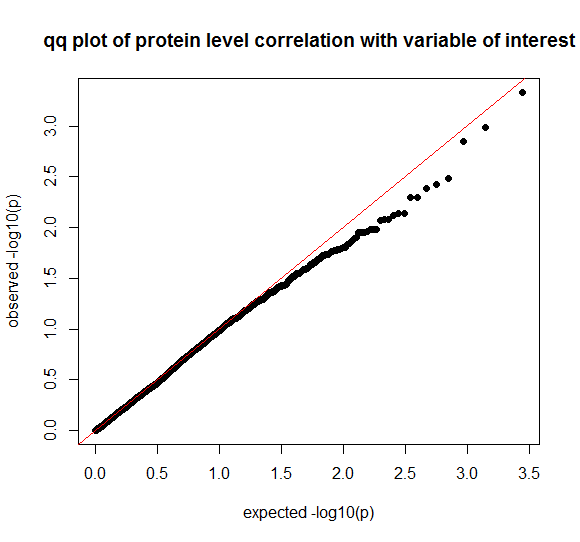

Once that’s done, this R script will loop through proteins and do all the correlations, check for lowest p value and make a qq plot of all the p values.

# set up vectors to hold correlation results

p = vector(length=2801,mode="numeric") # p value

cor = vector(length=2801,mode="numeric") # rho

protsym = vector(length=2801,mode="character") # uniprot id

for (i in 1:2801) { # loop through all quantified proteins

correl = cor.test(tprot[,i],var_of_interest) # do a pearson's correlation

# extract relevant numbers from the pearson's correlation result

p[i] = correl$p.value

cor[i] = correl$estimate

protsym[i] = as.character(names(tprot)[i])

}

# compile into a data frame

results = data.frame(protsym,cor,p)

# lowest p value

min(p)

# lowest p value after bonferroni correction

min(p*2801)

# make a qq plot of p values

n = 2801

observed = sort(results$p) # actual p values for proteins

expected = seq(1/n,1,1/n) # under null, p values are uniformly distributed between 0 and 1

o = -log10(observed) # log transform the p values

e = -log10(expected) # log transform the expected p values

plot(e,o,pch=19,xlab="expected -log10(p)",ylab="observed -log10(p)",main="qq plot of protein level correlation with variable of interest")

abline(a=0,b=1,col="red") # add a line representing the null

I tend to do my QQ plots “manually” as the above code does, but Stephen Turner has posted some nice functions for automatic QQ plots in R here and here. update: qqunif from the gap library is the best solution for automatic QQs that I have seen so far.

And just to be able to show an example QQ plot here, I made up a set of fake ‘observed’ values using one line:

observed = sort(runif(n=2801,min=0,max=1))

Which gives you uniformly distributed p values between 0 and 1, which is what you’d see under the null hypothesis. So if you run the above code using these ‘observed’ values you’ll probably see something like this:

Which would be wholly consistent with the null hypothesis that no protein is significantly correlated with the variable of interest.

2. Are any principal components correlated with the variable of interest?

In addition to knowing whether any one protein is correlated with the variable of interest, you might wonder whether the variable of interest explains a good fraction of the overall variance in protein expression profiles among your samples, or exerts a sort of protein level ‘signature’ across the dataset. It seems to me that a simple way to assess this would be to create principal components from the matrix and then ask whether any principal components are correlated with the variable of interest.

For instance, I’ve posted code for how to create population covariates in exome analysis using MDS plotting, which is similar to principal components analysis. If you do MDS plotting on a combined dataset of two sets of people genotyped using two different methods (say, different sequencing platforms, or one set from sequencing and one set from a SNP array) you sometimes find that the first MDS dimension (i.e. first principal component) explains a lot of variance simply by separating the two genotyping methods. That’s useful information, even if interpreting it (is one genotyping method especially error prone? did I have strands flipped on some SNPs? or is this a normal level of discordance between methods? etc.) is not trivial.

I implemented principal components analysis in R following this awesome tutorial by Kurt Hornik. An important point is that PCA cannot tolerate missing values. There’s an excellent stats.stackexchange discussion about what to do about this. The key, of course, is to know what the missing values represent in the real world, a question which my proteomics provider never answered. But as one user pointed out, if the number of missing values is small it doesn’t matter that much what you do with them. Since only 169 cells (0.25%) are missing for me, I’m going to fill them with the column mean – the average expression level for that protein:

tprot_fill = tprot # copy matrix

for (colno in 1:2801) { # loop through columns

avlevel = mean(tprot[,colno],na.rm=TRUE) # get column mean

tprot_fill[,colno][is.na(tprot_fill[,colno])] = avlevel # fill missing cells with column mean

}

And then here’s the PCA code, inspired by the above-cited tutorial:

covmatrix = cov(tprot_fill) # calculate covariance matrix

eigenvalues = eigen(covmatrix)$values # get eigenvalues

eigenvectors = eigen(covmatrix)$vectors # get eigenvectors

pc = as.matrix(tprot_fill) %*% eigenvectors # multiply matrices to get principal components

# double check that covariance of the PCs equals the eigenvalues on the diagonal and is ~0 elsewhere

cov(pc)[1:3,1:3]

eigenvalues[1:3]

# examine results

(eigenvalues/sum(eigenvalues))[1:10] # get fraction of variance explained by each of first 10 PCs

cumsum(eigenvalues/sum(eigenvalues))[1:10] # running total of variance explained by first 10 PCs

Once you’ve got these, you may find it of interest to plot the 24 samples in the space of the first 2 PCs and see what it looks like:

plot(pc[,1],pc[,2],pch=19,xlab="PC1",ylab="PC2",main="First 2 PCs of 24 proteomics samples")

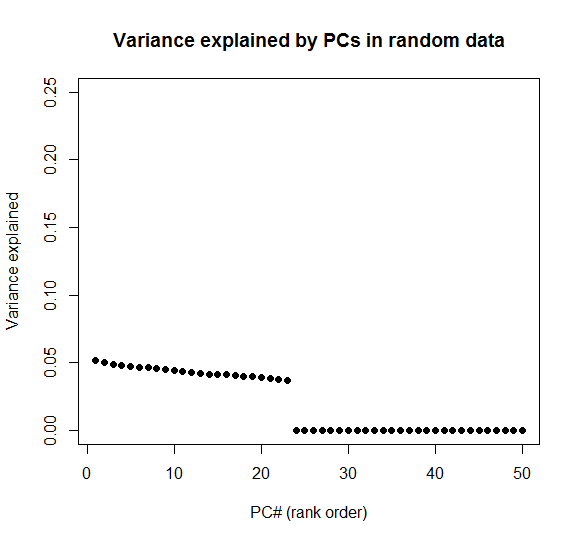

You may also learn something interesting about your data by looking at the distribution of variance explained by the various PCs. Since I have 24 samples, I’ll only get n-1 = 23 meaningful PCs (though the above code actually returns 2801 PCs, cols 24:2801 explain ~0 variance), but I plot the first 50 of them because the dropoff after #23 reminds you where zero is.

plot(seq(1,50,1),eigenvalues[1:50]/sum(eigenvalues[1:50]),pch=19)

For comparison, here is code create a matrix of completely random data and then calculate PCs:

randommatrix = matrix(rnorm(n=24*2801,m=0,sd=1),nrow=24)

covmatrix=cov(randommatrix)

eigenvalues = eigen(covmatrix)$values # get eigenvalues

xlab = "PC# (rank order)"

ylab = "Variance explained"

main = "Variance explained by PCs in random data"

ylim = c(0,.25)

plot(seq(1,50,1),eigenvalues[1:50]/sum(eigenvalues[1:50]),pch=19,xlab=xlab,ylab=ylab,ylim=ylim,main=main)

And it will give you a plot something like this:

The first PC explains about 5.0% of variance, then it drops off gradually to PC23 which explains 3.6% of variance, then after that it abruptly drops off to zero. Interpretation: it takes all 23 PCs to explain the random data. Random data has high information entropy and cannot easily be condensed down to just a few PCs. In contrast to this result for randomly generated data, I find that in real datasets, PC1 usually explains much more than 5% of variance and the variance explained by each PC drops off more gradually to zero before you even hit the theoretical limit of n-1. This is just an observation; I am still looking for a good statistics reference to explain how to more meaningfully interpret these facts. In any event, it may be worth plotting the variance explained by proteomics PCs.

The real question here was whether the variable of interest is correlated with one of the PCs. Here’s a loop to test them all, though if the variable of interest is really quite important I would expect it to be represented in the first one or two PCs.

variance_explained = eigenvalues/sum(eigenvalues) # calculate variance explained by each PC

lastpc = max(which(variance_explained>.001)) # index of last PC that explains more than 0.1% of variance

cors = vector(mode="numeric",length=lastpc) # to hold results

pvals = vector(mode="numeric",length=lastpc) # to hold results

for (i in 1:lastpc) {

correl = cor.test(pc[,i],var_of_interest)

pvals[i] = correl$p.value

cors[i] = correl$estimate

}

min(pvals) # smallest p value - do any look extremely significant?

which(pvals==min(pvals)) # and if so, which?

3. Is any protein’s expression level correlated with the variable of interest after controlling for covariates?

One possible outcome of your above analysis in Question 2 is that you find that your first PC or two explain a lot of variance but are not correlated with the variable of interest. Perhaps those PCs reflect something else about differences in cell culture across samples, or how the sample was processed, or population stratification among the people who donated samples. Certainly you could imagine that sex might matter, and that might be captured in one of the PCs or you might want to throw it in separately as a covariate. I’ll assume the latter case here.

# vectors to hold results

p = vector(length=2801,mode="numeric")

est = vector(length=2801,mode="numeric")

protsym = vector(length=2801,mode="character")

for (i in 1:2801) { # loop over proteins

m = lm(tprot[,i]~var_of_interest+sex+pc[,1]+pc[,2]) # linear model to explain protein level

p[i] = summary(m)$coefficients["var_of_interest","Pr(>|t|)"] # extract p value

cor[i] = summary(m)$coefficients["var_of_interest","Estimate"] # extract coefficient

protsym[i] = as.character(names(tprot)[i])

}

results2 = data.frame(protsym,cor,p) # assemble into a data frame

min(results2$p) # lowest p value

min(results2$p)*2801 # lowest p value after bonferroni correction

And of course you could qq plot the p values as demonstrated earlier.

In fact, sex can be not only a covariate but also a useful QC check. One would certainly expect at least some proteins should be significantly higher in women than men or vice versa. So we can loop through again pulling out the p value for sex correlation with proteins, and if none of those are significant that would be a bad sign:

for (i in 1:2801) { # loop over proteins

m = lm(tprot[,i]~var_of_interest+sex+pc[,1]+pc[,2])

p[i] = summary(m)$coefficients["sex","Pr(>|t|)"] # this time pull out p value for sex

cor[i] = summary(m)$coefficients["sex","Estimate"] # and its coefficient

protsym[i] = as.character(names(tprot)[i])

}

min(p)

min(p)*2801

If your Bonferroni-corrected lowest p value for sex vs. protein level is not significantly, I’d be rather worried about the noisiness of your dataset.

conclusion

This was just a few quick analyses to get started analyzing some proteomics data. You could certainly do more sophisticated stuff with these data, and by combining these with gene expression data from microarray or RNA-seq.

update

Thanks to my colleague Ashok Ragavendran for suggesting several corrections and improvements to the this post – edits above plus these two points:

1. It would be valuable to have an interaction term model with my variable of interest and sex. I didn’t realize that in lm, * is shorthand for including each variable separately and an interaction term, so for each protein you can do either:

m = lm(tprot[,i]~var_of_interest*sex)

or

m = lm(tprot[,i]~var_of_interest+sex+var_of_interest:sex)

2. As for multiple testing, Bonferroni correction is probably far too conservative. The built-in R function p.adjust includes Benjamini-Hochberg FDR correction which is probably more appropriate:

p.adjust(p,method="hochberg")

But I don’t fully understand the math behind it yet and hate using tools as black boxes – if anyone knows a good tutorial on how to do FDR correction “manually” in R (equivalent to this tutorial on PCA) please let me know.