The magic of blur and contrast

Last week’s literature review has really paid off. A few key ideas that emerged from it for me were:

- Blur. Image segmentation has been shown to work on more zoomed-out H&E images where the objects are too small to have internal texture. To mimic this effect with zoomed-in images, blur away the internal texture before segmentation.

- Contrast enhancement. Rescale the image to use the full intensity range and/or use nonlinear transformations to make the neurons bright enough that an algorithm can pick up both the neurons and the astrocytes at the same time.

- Size filters. Filter the objects by size after segmentation to separate neurons from astrocytes.
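As a rough sketch of the size-filter idea outside CellProfiler, the snippet below labels a binary mask with SciPy and splits the objects by pixel area. The function name `split_by_area`, the toy mask, and the cutoff of 30 pixels are all illustrative assumptions, as is the premise that neurons come out as the larger objects.

```python
import numpy as np
from scipy import ndimage as ndi

def split_by_area(mask, area_cutoff):
    """Label a binary mask and split its objects into small and large by pixel area."""
    labels, n = ndi.label(mask)
    # Pixel count per label; index 0 is the background and is skipped below.
    areas = np.bincount(labels.ravel(), minlength=n + 1)
    ids = np.arange(1, n + 1)
    small_ids = ids[areas[1:] < area_cutoff]
    large_ids = ids[areas[1:] >= area_cutoff]
    return np.isin(labels, small_ids), np.isin(labels, large_ids)

# Toy mask (made up): one 3x3 object and one 8x8 object.
mask = np.zeros((20, 20), dtype=bool)
mask[2:5, 2:5] = True
mask[8:16, 8:16] = True

small, large = split_by_area(mask, area_cutoff=30)
print(small.sum(), large.sum())  # prints: 9 64
```

In a real image the cutoff would have to be chosen from the observed size distribution of the segmented objects.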

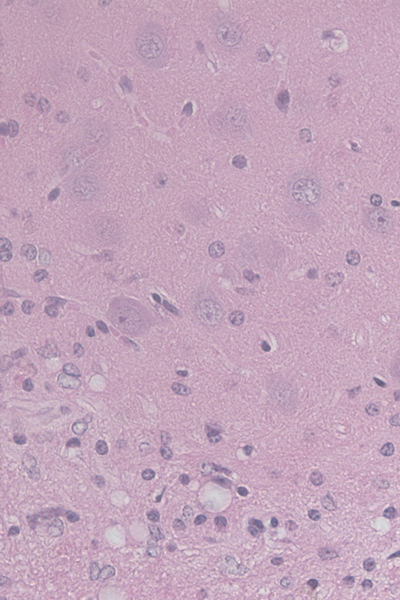

I started to roll these concepts into a new CellProfiler pipeline and was almost immediately impressed by the results. This level of segmentation accuracy still probably isn’t good enough for any serious feature analysis, but it’s a whole lot better than what I was getting. Here are preliminary results:

You can download the pipeline here (.txt; rename to .cp after you download). (N.B. The image above has been downsampled for web viewing, so the pipeline won’t work on it.)

The basic concept of the pipeline is:

- Deconvolute colors and use the hematoxylin signature

- Blur

- Enhance the contrast

- Blur again

- Segment
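For readers who want to try the same sequence of steps outside CellProfiler, here is a minimal sketch using NumPy and SciPy. It is not the pipeline itself: the stain vectors are the commonly used Ruifrok and Johnston values (an assumption), the fixed threshold stands in for IdentifyPrimaryObjects, and every function name and parameter value is hypothetical.

```python
import numpy as np
from scipy import ndimage as ndi

# Stain vectors (rows: hematoxylin, eosin, DAB). Assumed values from
# Ruifrok & Johnston's color deconvolution, not taken from the pipeline.
RGB_FROM_HED = np.array([[0.65, 0.70, 0.29],
                         [0.07, 0.99, 0.11],
                         [0.27, 0.57, 0.78]])
HED_FROM_RGB = np.linalg.inv(RGB_FROM_HED)

def hematoxylin_channel(rgb):
    """Unmix an RGB image (floats in [0, 1]) and return the hematoxylin density."""
    od = -np.log(np.clip(rgb, 1e-6, 1.0))  # optical density per RGB channel
    return (od @ HED_FROM_RGB)[..., 0]

def rescale(img):
    """Stretch the image to use the full [0, 1] range."""
    lo, hi = img.min(), img.max()
    return (img - lo) / (hi - lo) if hi > lo else np.zeros_like(img)

def segment(rgb, sigma1=2.0, sigma2=2.0, threshold=0.5):
    h = hematoxylin_channel(rgb)           # 1. deconvolve, keep hematoxylin
    h = ndi.gaussian_filter(h, sigma1)     # 2. blur
    h = rescale(h)                         # 3. enhance the contrast
    h = ndi.gaussian_filter(h, sigma2)     # 4. blur again
    labels, n = ndi.label(h > threshold)   # 5. segment (fixed threshold)
    return labels, n

# Synthetic test image: white background with two hematoxylin-stained squares.
rgb = np.ones((64, 64, 3))
stained = np.exp(-RGB_FROM_HED[0])         # RGB color of unit hematoxylin density
rgb[10:20, 10:20] = stained
rgb[40:50, 40:50] = stained

labels, n = segment(rgb)
print(n)
```

The two Gaussian blurs here correspond to the plain Gaussian option; an edge-preserving filter would need a different smoothing function.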

I spent some time playing with the modules and their parameters, with varied results:

- Smoothing: The “smooth keeping edges” filter preserves the edges of the astrocytes better and thus gives a better segmentation. But it also keeps too much of the internal texture of the neuron nucleoli, leading to split objects. Tweaking the edge intensity difference parameter looks promising, but I couldn’t manage to get it just right. The Gaussian filter, meanwhile, makes the neurons easier to segment (particularly with larger filter sizes, say above 10) but causes some mergers and overly generous edges on the astrocytes.

- Rescaling intensity: The default settings work pretty well here. I also tried setting a custom intensity range to expand, but this is tough because the neurons are barely brighter than the background: their intensity ranges overlap in the 0.33 to 0.36 range. This is why I ended up putting a blur before the rescaling, to get the neurons more uniformly bright first.

- Threshold correction: The threshold correction factor on IdentifyPrimaryObjects matters a lot. Setting it to 0.8 or so gives really good astrocyte recognition but loses some neurons. Setting it all the way down to 0.2 finds many neurons but draws overly large borders around the astrocytes.
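The two numeric knobs above are easy to illustrate in isolation. The sketch below shows a custom-range rescale and a multiplicative threshold correction in plain NumPy. The function names and the toy pixel values are made up for illustration, and the base threshold of 0.5 stands in for whatever the automatic thresholding method would compute.

```python
import numpy as np

def rescale_custom(img, low, high):
    """Stretch [low, high] to [0, 1], clipping values outside that range."""
    return np.clip((img - low) / (high - low), 0.0, 1.0)

def corrected_mask(img, base_threshold, correction):
    """Foreground is every pixel above base_threshold * correction."""
    return img > base_threshold * correction

# Stretching the narrow 0.33 to 0.36 band: anything above it saturates at 1.
values = np.array([0.33, 0.345, 0.36, 0.80])
stretched = rescale_custom(values, low=0.33, high=0.36)

# Toy pixels (made up): dim background, a faint object, a bright object.
pixels = np.array([0.05, 0.30, 0.90])
strict = corrected_mask(pixels, base_threshold=0.5, correction=0.8)
lenient = corrected_mask(pixels, base_threshold=0.5, correction=0.2)
print(strict.sum(), lenient.sum())  # prints: 1 2
```

A lower correction factor lowers the effective threshold, so faint objects are picked up but bright objects also gain extra border pixels.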

So I’m getting a lot closer here, but the details matter. I think the next step to really nail this segmentation will be to adapt the machine learning script to vary the parameters of not only the IdentifyPrimaryObjects module but also the RescaleIntensity and Smooth modules.
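To give a feel for what varying all three sets of parameters together might look like, here is a plain grid search scored against a reference mask. This is a stand-in for illustration only, not the machine learning script mentioned above; the function names, the parameter grids, the IoU score, and the synthetic image are all assumptions.

```python
import itertools
import numpy as np
from scipy import ndimage as ndi

def segment(img, sigma, low, high, correction, base_threshold=0.5):
    """Smooth, rescale a custom range to [0, 1], then apply a corrected threshold."""
    smoothed = ndi.gaussian_filter(img, sigma)
    stretched = np.clip((smoothed - low) / (high - low), 0.0, 1.0)
    return stretched > base_threshold * correction

def iou(a, b):
    """Intersection over union of two boolean masks."""
    union = np.logical_or(a, b).sum()
    return np.logical_and(a, b).sum() / union if union else 1.0

def grid_search(img, truth, sigmas, ranges, corrections):
    """Try every parameter combination and return the best (score, params)."""
    best = None
    for sigma, (low, high), corr in itertools.product(sigmas, ranges, corrections):
        score = iou(segment(img, sigma, low, high, corr), truth)
        if best is None or score > best[0]:
            best = (score, {"sigma": sigma, "range": (low, high), "correction": corr})
    return best

# Synthetic example: one 12x12 object slightly brighter than the background.
truth = np.zeros((40, 40), dtype=bool)
truth[14:26, 14:26] = True
img = 0.3 + 0.4 * truth.astype(float)

score, params = grid_search(
    img, truth,
    sigmas=(1.0, 2.0),
    ranges=((0.0, 1.0), (0.3, 0.7)),
    corrections=(0.2, 0.8, 1.0),
)
print(round(score, 3), params)
```

A real search would need hand-annotated reference masks and a score that treats neurons and astrocytes separately.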