Five years of screening, not one advanceable hit

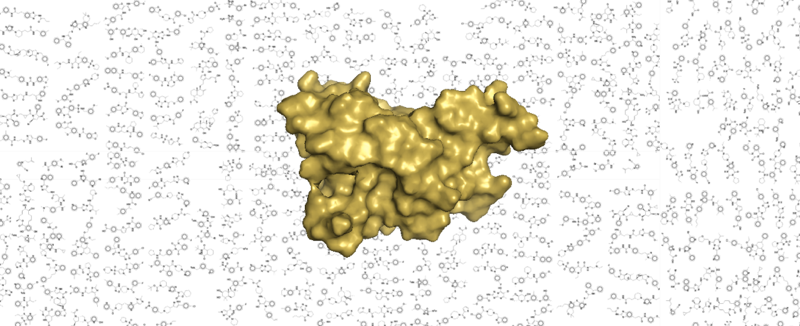

If you’ve followed this blog lately, you know that we’re hot on the trail of PrP-lowering antisense oligonucleotides. The overarching conclusion of our in vivo studies is that they work. What’s more, we think they could have a promising new clinical path ahead. In a new paper out today, we describe something else we tried that didn’t work: a wide variety of efforts undertaken over five years aimed at identifying small molecules that bind prion protein (PrP) [Reidenbach 2020]. Long story short, we put in a tremendous amount of effort, and we have no advanceable compounds to show for it. In fact, we barely even have a hit. You can read all the details in the paper, but I wanted to use this blog post as a chance to reflect on the road to here from a patient-scientist perspective.

first, a brief summary

In the paper we describe four screening efforts: a fragment-based drug discovery campaign using NMR, a thermal shift screen using DSF, a DNA-encoded library selection, and an in silico screen. We also describe the follow-up and validation efforts undertaken on the hits from each screen. From all this, at the end of the day, we got just one fragment hit that appeared to validate. It caused dose-responsive perturbations in PrP by NMR and a temperature shift in DSF. As far as we could tell, by every assay we threw at it, it appeared not to be an aggregator or some other artifact. But its affinity was incredibly weak (Kd on the order of 1 mM) and none of the close analogues we tested were any better, so in the end we felt there was no real starting point there for developing a better binder.

The most remarkable thing about our results is just how little traction we got on even beginning to find a PrP binder. People advocate for fragment-based drug discovery because while it may not give you a strong hit right away, it is supposed to give you at least some kind of starting material. People will point to examples where a target fairly refractory to other screening approaches, such as beta secretase (BACE1), was finally tackled through fragments [Stamford & Strickland 2013]. In our fragment campaign for PrP, we had a 1.6% primary hit rate, but all the hits were super weak to begin with. Never once did we see anything that jumped off the page, and when we went back to validate even by other NMR methods (let alone truly orthogonal methods), almost every hit invalidated instantly.

some history

I still remember the frantic hunger that I felt in 2012 and 2013 to run a small molecule screen. Sonia and I had just changed careers, fresh from her genetic diagnosis, and getting chemical matter in hand felt like the most tangible progress we could possibly make. What seemed at first like an impossible pipe dream gradually took shape as a reality. By mid-2012 we both had jobs in scientific research labs, and by 2014 we’d started a PhD program. Stuart Schreiber took us under his wing as mentees, the Broad Institute gave us lab space, and I won an F31 fellowship from NIH to develop small molecules targeting PrP. By mid-2015, we were beginning to undertake some of the efforts that we would eventually describe in this 2020 paper.

From early on, our efforts did not yield a ton of success, but this didn’t convince us to quit, because you have to remember, failure was expected. Anyone who has worked in drug discovery will tell you that most programs fail, so you have to have patience and try a lot of things and hope for one to succeed. Plus, we knew that we at least had the right target — PrP is beyond a shadow of a doubt the causal protein in prion disease — so while there was technical risk in whether we could identify chemical matter, the project at least had lower scientific risk than many. Moreover, we were incredibly lucky in other regards, and kept finding opportunities too good to pass up. At Broad we had access to compounds, screening technologies, and above all, expertise and advising, that would have been really hard to come by anywhere else. We got incredibly generous in-kind contributions of instrument time, compounds, and again, expertise and experience from Novartis, and later from Atomwise. A brilliant crystallographer, Cristy Nonato, came to do a year-long sabbatical in our lab. We were able to keep our work funded, and by the time I got too busy with the antisense and biomarker work, we had hired a smart, enthusiastic research scientist, Andrew Reidenbach, who was able to take up the torch of our small molecule program along with other projects in our lab. And so, year after year, despite not having so much as a hundred micromolar hit to our names, we kept putting one foot in front of the other.

But during the five years where our small molecule program took us nowhere, our other efforts were wildly successful. We developed biomarkers [Vallabh 2019, Minikel & Kuhn 2019]. We launched a patient registry and a clinical research study [Vallabh 2020b]. We answered some key genetics questions [Minikel 2014, Minikel 2016, Minikel 2019]. We partnered with Ionis Pharmaceuticals to kickstart a PrP-lowering antisense oligonucleotide into preclinical development [Raymond 2019, Minikel 2020]. We met with regulators to talk about how such a drug candidate could be tested in healthy people at risk, before they get sick [Vallabh 2020a]. I’ve been fueled by an internal optimism from day one, but now, thanks to all this progress, I can point and say, I believe this strategy is going to work. We’ve got a very serious shot at developing the first effective drug for prion disease in our lifetime.

With so many other urgent things to do and so little to show for our screens, we decided we’re ready to more or less wind down our small molecule binder program, at least for now. That’s not to say we’ll never do anything in that realm again. We certainly haven’t proven that the task is impossible. Maybe it just takes the right chemical library, the right screen, the right PrP construct. If/as opportunities and collaborations arise, we’ll evaluate each one. But binders are not going to be a major focus going forward. And so, it seemed time to finally write up this paper describing most of what we’d done so far.

reflections

Sonia and I talk to a lot of activated patients racing against time to develop a drug for themselves or a loved one, and running a screening campaign is often high on people’s list, just as it was for us in our early days. I grant that in some cases that might really be the optimal strategy — every disease is different. But on average, I suspect small molecule screening deserves far less priority than many people give it, or at least, that people need to go in with their eyes open to some of the challenges. So I wanted to share a few things I wish someone had told me at the outset. Or in some cases, things that people did tell me but that I wish I’d been able to grasp more fully at the time. If I can summarize these each in one word, they’d be: pipelines, PAINS, platforms, pivots, and priorities.

pipelines

In 2014, I gave a lab meeting presentation describing the therapeutics projects I wanted to undertake targeting PrP. David Altshuler, then chair of my department (he later left for Vertex), was presiding. He opened the Q&A with a cannonade of deeply practical advice that I wish I had audio recorded. One piece that stuck with me was, “Figure out ahead of time what your whole pipeline will be for validating and following up. There’s this temptation to think, we’ll worry about that once we have hits, but actually every screen has hits, you see.”

I kept this advice at the tip of my mind, and at a glance, you might say this is an aspect we did really well. We invested a ton of time in building up a rich validation pipeline by getting to know different biophysical assays, figuring out how they worked on our protein, and meticulously blogging the details: ITC, DSC, DSF, TROSY NMR. And had we ever gotten to the stage of having a lead compound, we would have had in hand the validated mouse models, biomarkers, and clinical path needed to help the program advance further.

But every time we thought we had this problem solved, it came up again. It turns out that a validation pipeline is a moving target — what assay you can use depends on many, many, boring-yet-important technical details.

For example, all the assays I listed above require relatively high protein concentration. You’re observing the protein, so you’ll only see a signal if the affinity is strong enough and/or the ligand is sufficiently in excess to make the thermodynamics work out. Thus, it’s very easy to end up limited by compound solubility, and to feel, as we did for our one validated hit, that you have no good place to go besides NMR.
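To make the concentration problem concrete, here is a minimal sketch of simple 1:1 binding that solves the exact quadratic for the protein–ligand complex, so it does not assume ligand is in vast excess. The protein concentration (50 µM) and the ~1 mM Kd are illustrative assumptions chosen to echo the weak fragment hit described above, and `fraction_bound` is a hypothetical helper, not code from the paper. With these numbers, 100 µM compound occupies under 10% of the protein, and even 1 mM compound gets you to only about half occupancy, which is exactly where compound solubility starts to bite.

```python
from math import sqrt


def fraction_bound(protein_uM: float, ligand_uM: float, kd_uM: float) -> float:
    """Fraction of total protein occupied by ligand, assuming simple 1:1 binding.

    Solves the exact quadratic for the complex concentration [PL], so it also
    accounts for ligand depleted by binding to the protein.
    """
    b = protein_uM + ligand_uM + kd_uM
    complex_uM = (b - sqrt(b * b - 4 * protein_uM * ligand_uM)) / 2
    return complex_uM / protein_uM


# Illustrative (assumed) numbers: 50 uM protein, a fragment with Kd ~ 1 mM.
for ligand in (100, 500, 1000, 2000):
    occupancy = fraction_bound(50, ligand, 1000)
    print(f"{ligand:>5} uM ligand -> {occupancy:.0%} of protein bound")
```

Occupancy keeps rising with more compound, but every doubling of ligand concentration is another chance for the compound to crash out of solution or aggregate.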

Meanwhile there’s a throughput issue. How many hits does your primary screen give you? Some of these secondary assays are sufficiently protein- or time-intensive that, while they’re great for testing one or ten compounds, you’re just not going to do hundreds. And, depending on how much you trust your primary screen, you simply may not want to invest the money in re-supplying hundreds of hit compounds in the first place, when each one might cost over a hundred dollars and have a <1% chance of validating. Bottom line, David Altshuler was warning me in 2014 not to think that having primary screen hits is such an asset, and I now realize that in some ways, hits are actually a liability. It’s possible to actually find yourself wishing you had fewer hits, so that you could afford each one the attention it deserves.
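The re-supply arithmetic can be sketched in a few lines. This assumes each hit validates independently with the same probability, and the inputs (100 or 300 hits, $100 per compound, a 1% validation rate) are hypothetical round numbers in the spirit of the paragraph above; `resupply_outlook` is an illustrative name. At a 1% rate, each expected validated hit costs about $10,000 in re-supply alone, and with 100 hits there is still roughly a 37% chance that none validate at all.

```python
def resupply_outlook(n_hits: int, cost_per_compound: float, p_validate: float) -> dict:
    """Back-of-envelope economics of re-supplying primary screen hits.

    Assumes (hypothetically) that every hit validates independently
    with the same probability p_validate.
    """
    return {
        "total_cost": n_hits * cost_per_compound,
        "expected_validated": n_hits * p_validate,
        # Re-supply spend per validated hit you can expect to get back.
        "cost_per_validated_hit": cost_per_compound / p_validate,
        # Chance that not a single hit survives validation.
        "p_none_validate": (1 - p_validate) ** n_hits,
    }


for n in (100, 300):
    print(n, resupply_outlook(n_hits=n, cost_per_compound=100, p_validate=0.01))
```

The independence assumption is generous: if many hits are the same kind of artifact, they fail together, and the chance of coming away with nothing is higher than this sketch suggests.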

PAINS

Screening isn’t something that should be done in isolation. To interpret the data from one screen, you need the data from many other screens as a comparator. One lesson I learned from poking around on PubChem early on is that if you screen a thousand compounds and get ten hits, they’re often the same ten garbage hits that everyone else got for their protein too. They’re probably not real, and definitely not specific. Avoiding pan-assay interference compounds (PAINS) isn’t solely about putting your SMILES strings through an aggregation predictor, it’s about having a catalog of the same type of screen done on ten or a hundred other targets.
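The cross-screen comparison can be sketched as a simple frequent-hitter filter: count how many historical screens each compound was a hit in, and set aside your own hits that show up too often. The compound IDs, the historical screens, and the 25% cutoff below are all made up for illustration, and `frequent_hitters` is a hypothetical helper. A real triage would also need the historical screens to be comparable to yours, which is the catch discussed next.

```python
from collections import Counter


def frequent_hitters(hits_by_screen: dict, max_fraction: float = 0.25) -> dict:
    """Compounds that were hits in more than max_fraction of prior screens.

    hits_by_screen maps a screen (e.g. a target name) to its set of hit IDs.
    Returns {compound_id: fraction_of_screens_hit} for the promiscuous ones.
    """
    counts = Counter(c for hits in hits_by_screen.values() for c in set(hits))
    n_screens = len(hits_by_screen)
    return {c: k / n_screens for c, k in counts.items() if k / n_screens > max_fraction}


# Hypothetical historical screens run in the same assay format on other targets.
historical = {
    "target_A": {"cpd1", "cpd2", "cpd7"},
    "target_B": {"cpd1", "cpd2"},
    "target_C": {"cpd1", "cpd9"},
    "target_D": {"cpd4"},
}
my_hits = {"cpd1", "cpd2", "cpd5"}

flagged = frequent_hitters(historical)
print(sorted(my_hits - flagged.keys()))  # ['cpd5']
```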

This, too, is something that people did tell us early on, and we did listen. Yet we learned over and over again that it’s not so simple. Even if you can do your screen at an institution that has done hundreds of them, those data may not be as comparable as you would hope. Sure, the same assay has been run before for other targets. But has it been run with the same compound library at the same concentration? With the same protein concentration? On the same instrument? Processed through the same software pipeline? Is there still institutional memory — do the people who ran those screens still work here and can they tell you what else might have been different about those screens that would not immediately occur to you?

platforms

Related to both of the above points, a realization that became more and more clear to us over these five years is that success comes in direct proportion to how much you can plug into platforms. Platforms meaning, someone has an assay set up, they run it for lots of targets, and you can plug in your target and click go and compare it to all their other results. Over these years we actually tried a lot of other things that didn’t even make it into the current paper, because we never got past assay development or initial hit interpretation. Though we didn’t find any strong hits, the screens we describe in this paper are the ones that were relatively successful, in the sense that we at least got a fairly interpretable negative result. And I chalk that up to the fact that we were able to plug into the experience and expertise of, say, Broad’s NMR platform or Novartis’s FAST lab.

And zooming out to a meta level, small molecule screening is inherently kind of a “throw a lot of things at the wall and see what sticks” approach. The prior for any one compound is incredibly low, the search space incredibly large, the potential for going down rabbit holes enormous. What we saw in our collaboration with Ionis Pharma is that oligonucleotide therapeutics are an entirely different story — it does involve screening sequences, but the prior is high and the pipeline from in silico to in cellulo to optimization to in vivo potency is, comparatively speaking, clockwork. It’s a platform technology. I suspect that the same is true, to varying degrees, of modalities like monoclonal antibodies, enzyme replacement, etc. I think it’s even true to a degree of certain small molecule programs where you start from a particular chemical scaffold known to be capable of targeting a specific class of proteins. In those cases perhaps you end up with a clear assay readout, a more circumscribed chemical space, and an obvious list of candidate off-targets to assess.

It’s easy for patient-scientists to feel, as we did, that even if small molecules have a low prior they have to try. But if your target fits into anything remotely resembling a platform technology, then make sure you give that approach due priority, because its prior is just so very much higher.

pivots

An overarching challenge throughout all of this is figuring out when to pivot. Lots of people gave us the advice that failure was expected. What no one pointed out is that this expectation makes it rather difficult to determine when to give up. When can one reject the null hypothesis of the expected amount of failure, in order to conclude that something just isn’t working at all? More formally, if you thought something had reasonable odds of success, say 50/50, then you’d only have to fail about 6 times in a row to conclude that the odds were in fact worse. If you think something only has a 5% shot of success, then you have to fail close to 80 times to reach this conclusion. (You can plug in your own numbers with binom.test in R.) I would be curious to hear how teams in industry reach the ultimate decision of when to give up on a particular approach. My suspicion is that this isn’t an area where the scientific method comes into play — I suspect it’s often a complicated, messy negotiation that depends more on available resources, and on how badly you want the target, than on any hard numbers.
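For readers without R handy, here is a rough Python sketch of the same question: the smallest number of straight failures that rejects an assumed success rate p at α = 0.05. The one-sided version uses the chance of n failures in a row, (1 − p)^n. The two-sided version is my approximation of the rule R’s `binom.test` documents (sum the probability of every outcome no more likely than the one observed, with a small tolerance); it is not a port of R’s source, and the function names are hypothetical. One-sided, this gives 5 failures for a 50/50 prior and 59 for a 5% prior; the two-sided rule gives 6 for 50/50 and a larger count for 5%, so the exact threshold you quote depends on which test you choose.

```python
from math import comb


def binom_pmf(k: int, n: int, p: float) -> float:
    """Probability of exactly k successes in n independent trials."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def p_value_all_failures(n: int, p: float, two_sided: bool = True) -> float:
    """P-value for seeing 0 successes in n trials if the true success rate were p."""
    p0 = binom_pmf(0, n, p)
    if not two_sided:
        return p0  # one-sided: P(X <= 0) = (1 - p) ** n
    # Two-sided (approximating R's binom.test rule): sum every outcome whose
    # probability is no larger than that of the observed one, with 1e-7 tolerance.
    probs = [binom_pmf(k, n, p) for k in range(n + 1)]
    return min(1.0, sum(q for q in probs if q <= p0 * (1 + 1e-7)))


def failures_needed(p: float, alpha: float = 0.05, two_sided: bool = True) -> int:
    """Smallest number of consecutive failures that rejects 'success rate = p'."""
    n = 1
    while p_value_all_failures(n, p, two_sided) >= alpha:
        n += 1
    return n


for prior in (0.5, 0.05):
    print(prior, failures_needed(prior, two_sided=False), failures_needed(prior))
```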

priorities

Perhaps, if there’s no hard science to deciding when to pivot, the key thing is to circle back repeatedly to the questions of 1) what else is there to do, and 2) for each thing, what will you learn if you fail? In screening, if you end up without a validated hit or a lead you can take forward in the pipeline, you could come out close to empty-handed. (Or worse — holding something you think is real, and wasting many years developing it!) When I look back at our small molecule programs, I’ll grant that it did teach us some stuff. We got good at producing and characterizing our protein, which turned out to be critical for biomarker assays. We got good at doing biophysical assays on it, which helped us investigate and rule out an aptameric mechanism of action for ASOs [Reidenbach & Minikel 2019]. And given our level of commitment to our target, there is certainly some value to learning that this target is indeed, it appears, a difficult one for small molecules, where binders must be fairly rare in chemical space.

But looking at everything else we did over the same five-year timespan, the “negative” results that we got in those areas taught us so much more. Finding that all PrP peptides in CSF behave similarly by mass spec [Minikel & Kuhn 2019] helped us build confidence in our biomarker. Discovering that fluid biomarkers were usually normal in asymptomatic people at high genetic risk [Vallabh 2020b] informed our clinical path [Vallabh 2020a]. Learning that some genetic mutations don’t cause disease helped define our patient population [Minikel 2016]. In each case, we had focused on an open question where we had or could build the tools to answer it and where we valued the answer either way. Confirming and rejecting the null hypothesis were equally valuable outcomes. For small molecule screening, much as I can point to some value the projects generated, there’s no question that the goal was to find a binder, and not finding one was just not nearly as exciting.

Last year I blogged about the financial models governing rare disease drug development and I made the case that for patient-scientists, setting out to discover the actual drug itself is most often not going to be the most productive thing you can do. There are lots of other things that most diseases need in order to be “developable” but that, in most cases, neither pharma nor government will support: defining the patient population, cataloguing natural history, developing biomarkers and clinical outcome measures, validating the target and the therapeutic hypothesis, generating animal models, and so on. Compared to screening, these are all activities where the odds of success are far better and where the value of a “negative” result is also far higher. And I didn’t appreciate at the outset that they’re usually not solved problems — prion disease has seemingly been studied to death for four decades and been the subject of two Nobel prizes, and we’re so lucky that so much was known, but there was still a ton we had to do. In most rare diseases, those areas will be even more important and even more uncharted.

As I always caveat, every disease is different and I don’t assume that what we learned for prion disease and PrP fits everyone’s story. But I hope that at least this post can serve as a slightly deeper dive on some of the challenges that may lie ahead if you want to move forward with screening.